Last week, Ivo Façoco, Maria Russo, Pedro Matias, and Luís Rosado from Fraunhofer Portugal AICOS, introduced the pyMDMA Tutorial at #IbPRIA2025: 12th Iberian Conference on Pattern Recognition and Image Analysis, an open-source Python library designed to support the comprehensive auditing of both real and synthetic datasets across multiple data modalities, including images, tabular data, and time series.

Developed to address limitations in existing data quality assessment tools, pyMDMA offers an extensive set of metrics, organized under a novel taxonomy that aids users in selecting appropriate evaluation methods based on their specific auditing goals. The framework aims to fill critical gaps in current practices, which often exclude certain modalities or fail to incorporate state-of-the-art metrics.

A Unified Approach to Multimodal Data Evaluation

pyMDMA stands out for its ability to audit data from diverse domains through a standardized codebase. This structure not only simplifies the integration of quality metrics but also promotes consistency in evaluations across modalities. The library categorizes metrics into four primary groups—quality, privacy, validity, and utility—and organizes them based on data modality (image, time series, tabular), validation domain (input or synthetic), and metric type (data-based, annotation-based, or feature-based).

By delivering additional statistical summaries for each metric, pyMDMA empowers users to draw clearer, more actionable insights from their assessments.

Addressing a Growing Need in the Era of Generative AI

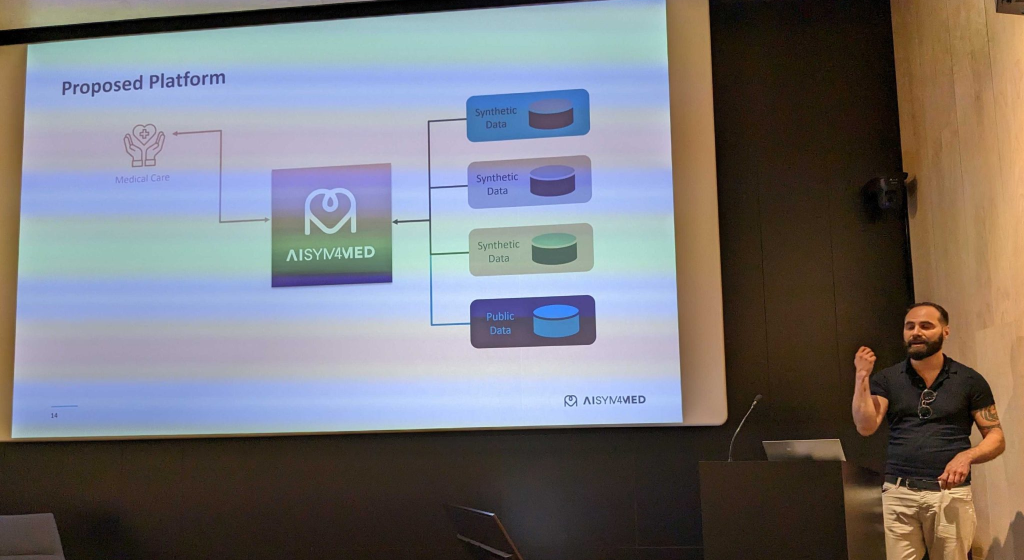

With the increasing use of synthetic data in applications ranging from industrial automation to healthcare, robust evaluation tools have become indispensable. pyMDMA responds to this need by supporting both input metrics—used to assess the quality of real-world data—and synthetic metrics, which evaluate the properties of generated datasets.

The framework is particularly relevant in scenarios where synthetic data is used to compensate for underrepresented cases in real datasets. Unlike many existing tools, pyMDMA facilitates cross-modality comparisons and helps researchers ensure the reliability and representativeness of their data.

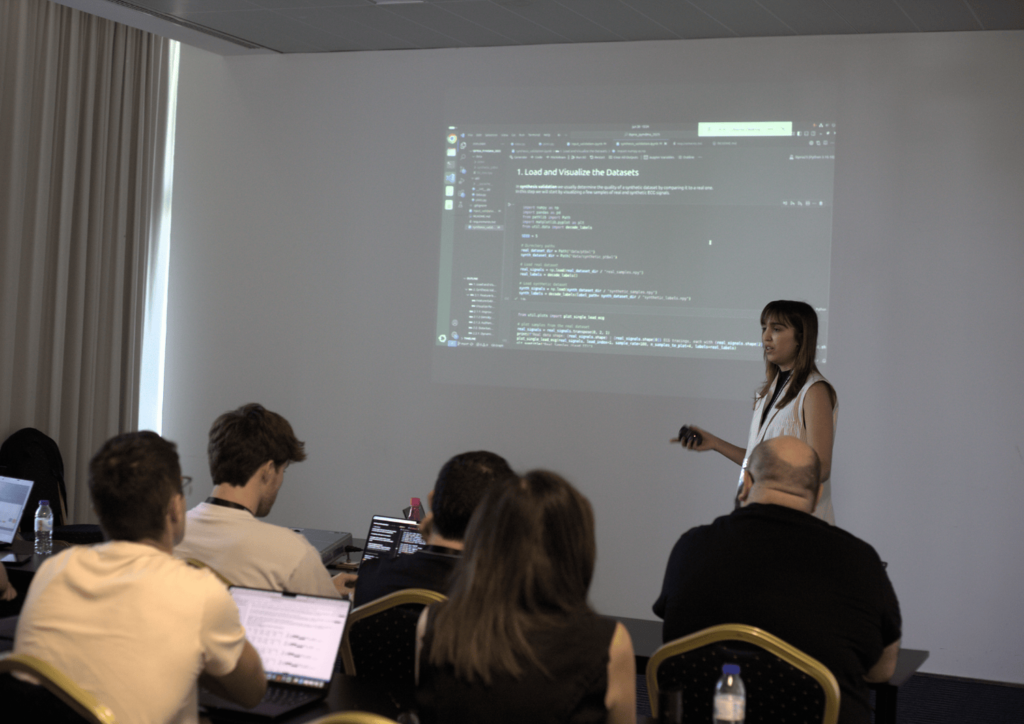

A Hands-On Learning Experience

To promote the practical adoption of the tool, the developers have structured an in-depth tutorial consisting of three interactive sessions. Each session targets one data modality—image, time series, or tabular—and includes hands-on exercises using Jupyter notebooks. These sessions guide participants through data preparation, quality evaluation, and interpretation of results for both real and synthetic datasets.

The tutorial was aimed at a broad audience, including data scientists, AI researchers, and auditors working with multimodal datasets. It offers valuable insights for professionals seeking to improve data quality assessment processes in research and real-world applications.

The framework is publicly available at GitHub.